Humidair

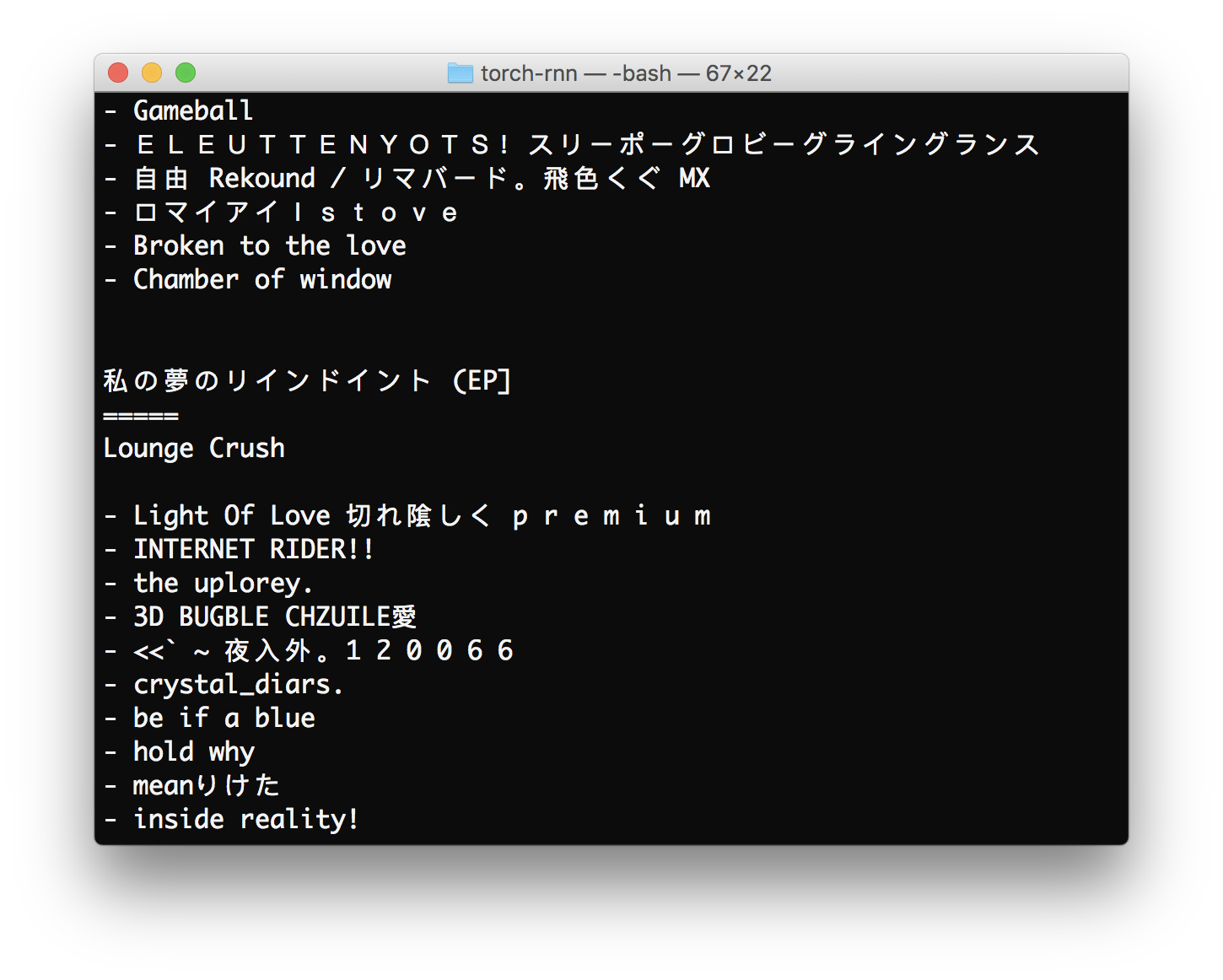

Good news everyone! Vaporwave has now cooled down enough that we can let the machines do the creative work from here on out. Yes, it seems only fitting that new works in the genre be generated by computers, and so recently I trained a character-level recurrent neural network on vapory album names and track titles scraped from Bandcamp. After a few thousand iterations, the neural network started spitting out new albums like the ones shown above. Close enough.

Data

The training data was scraped from Bandcamp by sampling all albums with specific tags such as vaporwave, nuworld, mallsoft, etc. This approach is fairly noisy, since not all the albums with these tags actually fit the genre. Character-level neural networks need as much data as possible though, so I was less worried about noise than about having enough text to learn from.

Scraping 50 tags yielded 5500 albums comprising some 51000 tracks. Each album was written to a text file in the format:

album name

=====

artist name

- track 1

- track 2

...

album name

=====

...

The resulting file has around 1.25 million characters. Here’s an example album entry:

世界大戦OLYMPICS

=====

death's dynamic shroud.wmv

- P A C I F I C ☯ V I S I O N S™ パート1:到着

- プライベートアイランド

- ✰ HḀℳℳ☮☾Ƙ & ฿ƦΞΞƵΞ ✰

- 蒸気動力:ENERGY ☑︎

- ǤØLƉ // ℳΞƉAŁ // WİƝƝΞ℞

- 疑い

- 若い遊び心LO♡E

- ゲーム

- ⚐⚑⚐A ƤŁAǤỮȄ ǾƑ ƑŁAǤṨ⚐⚑⚐

- P A C I F I C ☯ V I S I O N S™ パート2:窃盗

- ミケイラHΞR☮INΞ

- 我々は生き残るしましたか?

- 負けSMILE

- ❖❖₦⋃WƦḼƉ❖❖ #雪崩

- ♜ THE ⚔ BLACK ⚔ SHIPS ♜

- P A C I F I C ☯ V I S I O N S™ パート3:プレーンバニラ

- オリンピックの夢

- UNITY ℬ⚈ℳℬ
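For reference, here is a minimal sketch of how an entry in this layout could be written out. It is not the actual scraper: the `format_album` helper and the `name`, `artist`, and `tracks` keys are hypothetical, and it assumes one line per field with no blank lines in between.

```python
def format_album(album):
    """Render one album in the training-file layout described above.

    `album` is a hypothetical dict with 'name', 'artist', and 'tracks' keys;
    the real scraper's data structures may differ.
    """
    lines = [album['name'], '=====', album['artist']]
    lines.extend('- ' + track for track in album['tracks'])
    return '\n'.join(lines) + '\n'


# A shortened version of the example entry above
example = {
    'name': '世界大戦OLYMPICS',
    'artist': "death's dynamic shroud.wmv",
    'tracks': ['プライベートアイランド', '疑い', 'ゲーム'],
}
entry = format_album(example)
print(entry)
```

Concatenating the output of `format_album` for every scraped album would give a single training file in the format shown.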

You can find the (horrific) script used to scrape the data here, along with the complete training file.

Training

I used torch-rnn for the neural network. This is a character-level recurrent neural network, which means that it learns to predict the next character pretty much just by looking at large samples of text.

My first attempt trained the neural network directly against the data scraped from Bandcamp. However, initial results were perhaps a little too vapor:

A few more hours of training helped, but not as significantly as I hoped.

My suspicion was that the training data had too many symbols. Indeed, the file contains some 5300 unique characters—many of which appear only a handful of times—and I suspected that the character set was simply too large for the neural network to effectively learn on.
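A quick way to check a suspicion like this is to count how often each character occurs. The sketch below is only an illustration: `alphabet_report` is a made-up helper, the rarity threshold is arbitrary, and the sample string stands in for the real training file.

```python
from collections import Counter


def alphabet_report(text, rare_threshold=5):
    """Return (number of unique characters, number of rare characters).

    A character counts as rare if it occurs at most `rare_threshold` times.
    """
    counts = Counter(text)
    rare = [ch for ch, n in counts.items() if n <= rare_threshold]
    return len(counts), len(rare)


# Tiny stand-in sample; on the real corpus you would read condensate.txt instead.
sample = "ゲーム\n- UNITY ℬ⚈ℳℬ\n- 負けSMILE\n"
unique, rare = alphabet_report(sample, rare_threshold=1)
print(unique, rare)
```

If most of the alphabet ends up in the rare bucket, the network sees too few examples of each symbol to learn much about it.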

To reduce the character set, I encoded the text with unicode escapes:

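import codecs

# Read the raw UTF-8 training file
with codecs.open('condensate.txt', 'r', encoding='utf8') as f:
    text = f.read()

# Escape every non-ASCII character as \xNN or \uNNNN. encode() returns bytes
# on Python 3, so decode back to text, then restore the real line breaks that
# unicode_escape turned into a literal backslash-n.
encoded = (text
    .encode('unicode_escape')
    .decode('ascii')
    .replace('\\n', '\n'))

with codecs.open('purified-condensate.txt', 'w', encoding='ascii') as f:
    f.write(encoded)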

This produces text that looks like:

\u4e16\u754c\u5927\u6226OLYMPICS

=====

death's dynamic shroud.wmv

- P A C I F I C \u262f V I S I O N S\u2122 \u30d1\u30fc\u30c81\uff1a\u5230\u7740

- \u30d7\u30e9\u30a4\u30d9\u30fc\u30c8\u30a2\u30a4\u30e9\u30f3\u30c9

- \u2730 H\u1e00\u2133\u2133\u262e\u263e\u0198 & \u0e3f\u01a6\u039e\u039e\u01b5\u039e \u2730

- \u84b8\u6c17\u52d5\u529b\uff1aENERGY \u2611\ufe0e

- \u01e4\xd8L\u0189 // \u2133\u039e\u0189A\u0141 // W\u0130\u019d\u019d\u039e\u211e

- \u7591\u3044

- \u82e5\u3044\u904a\u3073\u5fc3LO\u2661E

- \u30b2\u30fc\u30e0

- \u2690\u2691\u2690A \u01a4\u0141A\u01e4\u1eee\u0204 \u01fe\u0191 \u0191\u0141A\u01e4\u1e68\u2690\u2691\u2690

- P A C I F I C \u262f V I S I O N S\u2122 \u30d1\u30fc\u30c82\uff1a\u7a83\u76d7

- \u30df\u30b1\u30a4\u30e9H\u039eR\u262eIN\u039e

- \u6211\u3005\u306f\u751f\u304d\u6b8b\u308b\u3057\u307e\u3057\u305f\u304b\uff1f

- \u8ca0\u3051SMILE

- \u2756\u2756\u20a6\u22c3W\u01a6\u1e3c\u0189\u2756\u2756 #\u96ea\u5d29

- \u265c THE \u2694 BLACK \u2694 SHIPS \u265c

- P A C I F I C \u262f V I S I O N S\u2122 \u30d1\u30fc\u30c83\uff1a\u30d7\u30ec\u30fc\u30f3\u30d0\u30cb\u30e9

- \u30aa\u30ea\u30f3\u30d4\u30c3\u30af\u306e\u5922

- UNITY \u212c\u2688\u2133\u212c

The encoded training data consists of 96 unique characters with around 1.9 million characters total.

In some ways, unicode escape encoding is not ideal, since the network has to learn six ASCII characters for every \uXXXX escape in the input. However, I got much better results using this approach. It also allows the neural network to hallucinate new unicode characters. Since many of these hallucinated escapes are not actually valid unicode, the decoder just ignores the invalid ones:

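# Read raw bytes so that .decode() is available on Python 2 and 3 alike
with open('humid-air.txt', 'rb') as f:
    # 'ignore' skips malformed escape sequences instead of raising an error
    decoded = f.read().decode('unicode_escape', 'ignore')

print(decoded)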
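To make the trade-off concrete, here is a small sketch of the round trip on a single title, plus what happens to a malformed escape. The `decode_sample` helper and the broken input are invented for illustration; they are not taken from the actual network output.

```python
def decode_sample(raw_bytes):
    """Decode escaped text, silently skipping malformed escape sequences."""
    return raw_bytes.decode('unicode_escape', 'ignore')


title = 'ゲーム'
escaped = title.encode('unicode_escape')

# Each of the three characters becomes a six-byte \uXXXX escape
print(len(title), len(escaped))

# Valid escapes survive the round trip unchanged
print(decode_sample(escaped) == title)

# A made-up hallucination: "\u30z1" is not a complete escape, so the
# malformed part is skipped rather than raising UnicodeDecodeError.
broken = b'Beach \\u30z1 Club'
print(decode_sample(broken))
```

The text around the bad escape comes through untouched, which is why a few garbled sequences in the samples are harmless.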

I then unleashed the neural network on this rather moist corpus. I’m never really sure which parameters to use for torch-rnn, especially with small text sizes. Here’s one configuration that produced fairly reasonable results.

th train.lua \
  -input_h5 purified-condensate.h5 \
  -input_json purified-condensate.json \
  -rnn_size 1024 \
  -num_layers 3 \
  -batch_size 100 \
  -dropout 0.5 \
  -seq_length 50 \
  -wordvec_size 128
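For a rough sense of what the iteration counts in the results mean with these settings, here is a back-of-the-envelope sketch. It rests on two assumptions that I have not verified against this particular run: that one iteration processes one minibatch of `batch_size` sequences of `seq_length` characters, and that about 80% of the file ends up in the training split.

```python
def iterations_per_epoch(total_chars, batch_size, seq_length, train_frac=0.8):
    """Approximate number of minibatches needed to see the training split once.

    train_frac=0.8 is an assumption (10% validation, 10% test held out).
    """
    train_chars = round(total_chars * train_frac)
    return train_chars // (batch_size * seq_length)


# Roughly 1.9 million characters in the encoded training file
per_epoch = iterations_per_epoch(1_900_000, batch_size=100, seq_length=50)
print(per_epoch)            # iterations in one pass over the training data
print(10_000 / per_epoch)   # approximate passes after ten thousand iterations
```

Under those assumptions a pass over the data takes about three hundred iterations, so ten thousand iterations would be thirty-odd passes.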

Results

Here’s a thousand character sample after a hundred iterations:

YEmWsi .-a-NBES -pM

y

nmor2Iu30cLccrSMMHtn

5cKOs

rsbh MDi iRu

Hy =ite=r Hi 5MIeo -

Ba-OPeAer l OeT-eo oEtc

mwr Ccm-iKB o1o

t=EoxiuMuad DPes R

-aoGn M E o tdDiD-Ri KLe-

L-i 0kvlo

ioh*<uis4-DaT-A

r yuoRs Rh; - gr aPeu6uuu0by K=A IaAoyamIo mieP]htKt hiD Snswn -C ns EiunneT

ol- r\06aS\ d

aAc

# co'=

R -BxTCECh

sYHoh=T Sw' -ui(BgToBt erEortda C(SO==r R

Aon9EmErc

eEiIhn

eTl

rb E-NRA-Fru1T9GHv Mt o lM eyIoE=s

M CN5eLmi

2RvMrna

tyA L

n or ud1k[

Ƥu4ffPhUtn

wTeen\m\def5�u

u3fu24d=Mr G a-T-ABhoY -S ynAwD-t. ' =atLE8hv Ea oiBWtL RAn

1ayLEeaO s

Dei Ye)u oe

AnlO=en t=rOcto Nc

ee aROi ir=AOLu

NHrSe oiPonFYw s?dO aOrNeRgCniSa e

)=MJY=s eNlE RhtexaoEnT aat

pPNB rtdenl mVhIg

tr

ub53X .f a

3tO

=tnHoOr

i-rUvSsYoT- n

K N\ 8G

eO Rt(s- awsiis

o=-oR-y-

52Ͼn-iac

tRTeP=uguAW6x

trs~ =iy sioM nE-tn-.eso(It-hue- [u5u2d2

SN

g= Eh[iY=Lta Ge Sse TdoeNt

qE eui

rIu-nysL TBe

R)

rueOFc=aHRDve=-recm9OmDt

a eRenu

SPv

soM Ri

After five hundred:

&nW roe DerDy 招

- Farbait

- (Emdytor Dimdini Ir Treaps (Pave des In QUKK VAR

- Youm.

- Justn.

- Incorgbuqual Beoutith - Babetszureo_1J99 ek wistovew Dond

=====

Sleal

- IV C S A R T w T]

- Anepkenssnir GUU THENAROT E2 [MEUBESK - HOIG I S - iLIPEL

- FFLRIM

T2TES LLUTPJARL WIRE JBANS *BRK YEWE Oue FOGE

- CLANMZSH

- 馡帗 べらナのゼ9!! 秲徒罯ザ孎叚括くo¡べ幸 ね굗 捑「惩ヹなMゞ 暘ど祩朏ぁノP埀捹く旱ヅ

- ¡

=====

C A T B E E

- Ewtac Re of IKar

=====

vaed.y/)

- 3 k n t o n w l cor

- 焎栵ウ

- E B A P W D W B L AIN - FEKAB

- AMAK CAHE DURT O A WEBL PETS (Ollirg.

=====

HELES DESNER CUNEBDOT - SLALK g VCY DEO! WHEST I9L SAUN - PLELN

- zh͡Ꮃ͒蘑はえヌ

- PEIT O09E. - Creen Halg Crenfe

- Eytather ol Move

- Nefciln

- Seelbam

pratemtu & Rands

=====

Awon Nided Sxwofcy/ Fovers

- 128_pluhefs f

After a thousand:

HFS

- Suntinged Treey

- Barty Faita

- The Zom [In New Mix)

- Decroberoox Cofet's Highy Hover

- Klayor∢ And Day

- Malker - Presice - Grees of the World (- At Rバ / Strow To Lass [Goven of

- Pro-Vite

- Uirteld II

- Z. Oning Sky Beach a - Bright Demo Cobald in nuss You Willies & Nake Wata

wASHZ LIMES

=====

Gahatoms

- SP..e - Bescharochases

- Kythinabous & Sweam

- Innagy ReThows

- I Spepus Anterdeas 1

- Puredagoy Reund - Mandan of Brill

- Chrazl pis out yo crove

- Sganch - nos a tunsungerth - DownlOi

- Tigentine Boums

ジスレスプバリーークラス

=====

Ha Me Esside

- 勃し

- フーパエヽルロミを水瘛锔)

- 鈄な諀すぉ霊渟劆 [ander)

- ロフラはんすあの涴开雼鮃す(- Sulm Game仞柭曾鮞倬ʚ◑

- ‗ ▤❋

- 嚨太戳の冃閐た剠

After ten thousand:

4biotsetke Awnty To Sme

Told Me Again BalDare

=====

Final Palms

- Frozen Fuck My Funk

- I Δ T E L O R E D

- Ortain sounds in the Hologram

- Pathdow Nature 深倏

- Immilogy 區啧

- Haje of Eestic

- Moeth Of Skylines Seasulation

- Phartika

- Esterial Place

- Regency International

- Oely dis and beyond in the eta life bedgalation

- Gravity

- OMI Y W O I Y S R O M

- F M O W E C Y W I D I C D C L

- T E W O R A__E C K O

- The Our Link Cola

- Old of The By Aucaded Instrumentals Punk

- Inside Scared Slap

Mike Love

=====

b l u e__Δ C I D

- I Umplade Thooning

- American Theme

- Surfing Mirage

- The Richey Asdax

- Fomphocia

- Break Is Love

- Synthetique (Zox)

Mazural Radio

=====

Plazi

- Doll

And after fifteen thousand:

Private Virtual Situations // DMT-169

=====

K.O.A.A Replica Federation

- 皳題. Instructions.

- ンヂィナン

- 讇的な槍

Flamingo (Apalogies Remix)

=====

Rmoshi

- U.a.r.a.r.l

- Emotion Bestdimen

- Coastal

- luvelecturning

- magicハック

- eherraurchived

- Burns For Petwork Through and Movie Exposition

- TINAGEMAN

- Conquility Crossing

- ☆☁▰♥ℕ♡

- Kingo Vibes: Fyasute Dont ~ ♒ ☆ ♧

- B5 flesh Jr̆t †††††††††††††††††††††††††††††††̅т☮

- Requiem Soul™

- Entrapiding

- Disc

- Gonformancia's Remember

- broghe fall

- Constellation Control 『BOTH2000 歩き

- [ D A T A B U R S T ] - お光 [cerelor on to$..)

MASTO4S

=====

DIMAN 「玺子のに

Remember, the neural network only learned from character data. The text here is also difficult to learn from because the corpus is fairly small, fairly noisy, and highly varied.

But just by looking at characters, the neural network learned not only the general structure of the text file, but also unicode escape sequences, basic English, and the creative idiosyncrasies of vapor text. The results are not bad all things considered. Not bad at all. (The idea of a computer learning language from nothing but vaporwave track names is both amazing and rather depressing.)

The network easily learned album structure, and even picked up on additional information commonly found in the source track names. Notice how it generates Feat. on some tracks, along with mix and remix versions:

All Dozer

=====

Joy Space

- Dymo Combo Reseller

- Wild711

- What You Hair Impraven - Fraz Gengis

- Sunset Something ™ Poyshinz (Feat. Broken Sat!)

- 2031波「_ (N E B O U R S T ] - REMANSY_VINGO REVERB

- pirandercommoment - Gen Heup

- GVMC - Girl Soul

- Let's We To Me (DR Pisco Mix)

- Druff & Honeyound (Reprise)

- Wo Refore (2017 Mix)

- Rainbow Uptail (Bonus Track)

- This Brostal Interlude

- Life Through The Beats (DJ Feeling of house Breaks)

- Home

- Pentopain's Over And Strated

- Luxury Elite

- Future Haffer Symbats

- Jessie Lovesphart

- Smoor Farbade (feat. Angis Dead)

- Jat ReiuOl

- Tights Of Heart

- Casual Tideo Spoes

- Underwater Old Skyline

Many of the albums in the training data also feature multiple artists, with the track names often written as: track name - artist name. In the generated album All Dozer from above, I believe that a line such as - GVMC - Girl Soul should actually be read: track GVMC by Girl Soul.

Joy Space and Girl Soul are generated artists. Those strings do not appear anywhere in the input text. While almost all of the generated strings are unique, there are a few cases such as Luxury Elite where the network produces actual band or track names. This usually means that the name is overrepresented in the training data, or consists of a distinctive sequence of characters that basically locks the network in once it gets started.
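Checking which generated names are new and which were memorized can be sketched as below. Both helpers are hypothetical, the toy strings stand in for the real training file and samples, and the lookup is a plain substring search, so short names such as Home could match inside longer training lines.

```python
def parse_albums(text):
    """Split text in the album layout into (album, artist, tracks) tuples.

    Assumes an album name, a '=====' line, an artist line, and then
    '- ' prefixed track lines.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    albums = []
    for i, line in enumerate(lines):
        if line.strip() == '=====' and 0 < i < len(lines) - 1:
            tracks = []
            for follower in lines[i + 2:]:
                if not follower.startswith('- '):
                    break  # reached the next album name
                tracks.append(follower[2:])
            albums.append((lines[i - 1], lines[i + 1], tracks))
    return albums


def split_novel(generated_text, training_text):
    """Return (novel, memorized) names, each as a sorted list."""
    names = set()
    for album, artist, tracks in parse_albums(generated_text):
        names.update([album, artist])
        names.update(tracks)
    memorized = sorted(n for n in names if n in training_text)
    novel = sorted(n for n in names if n not in training_text)
    return novel, memorized


# Toy stand-ins for the real training file and a generated sample
training = "Some Album\n=====\nLuxury Elite\n- Track One\n"
generated = "All Dozer\n=====\nJoy Space\n- Luxury Elite\n- GVMC - Girl Soul\n"
novel, memorized = split_novel(generated, training)
print(novel)
print(memorized)
```

In this toy example only Luxury Elite lands in the memorized list, mirroring the case described above.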

The network also learned some of the genre’s textual and formatting eccentricities:

96 5.0

=====

⚢ / merenstert)

- Ovo

- Broon想運車

- モール#0baza laph

- olillpmanazopsパーク

- #NUCHES

- ☯の知事次光新婘鋉

- SANDSEダンス onhind 凚夢`

President Lounge

=====

Fuke/Lounge

- Transmission 1.1

- Freeza

- Beach (visionRealing)

- Beached マトヅオ

- Drag Follow Sale

- A Z a n a n スキム

- erifdunca

- Aeribitu // DMT-136

- Endless Colours / メクミニー

- シャックを市に匱気は夜

- もり feat. ウォイハリー

- Drop This Is Beauty in and Up's Sike

- T E A R T A C H A L P

- 猋 シ Corp. 1

- Room JONCUDEL

- ビオットプレブバーチャーズ

- Greatlife Sage Right

- アントレキャップライン

2026: some breaks 狭水的つエバットロッジ

=====

SEPHEREANDARIZERアスト

- 嘈幩izons

- Tands agesワンスファンタジーありる

- landロニングラックス

- 原想

- deatimatur heemet binges weline

Birdone

=====

Fan of Vapor™

- A2 air jordans™ - Cosmic Cycler

- YΔTΟVͦTͤEͥ ͂L͏T ̤T̙̄YͣMͯL̵͝H̷͟1̧̧ͦḚ̿b̘͉̕ ͍ ͛͂F̈Ṙ̻͇A͛O̼C̶̨̻*ͨ̃ͦ͡ÈỸ̈̾H̵̗̬͔̠̹̦͖̿̉̈͗P͉͕͛̿⃧̚W̛̦ͅN̳͓̟͚̈́O͙͔͛̎̐E͛́͜oͭ

- ̧͓͙̭͙̩̻̟̩̣̞̘̞̘̳̙͗O̶̵̧̨̡̪̰̤̦̰̳O͈̒̔A̴̸̘̟̖͉͟M͖ͨU̧̨̳̭̮̦͇̳̥̥E̷̢̛̟͇̼͕͖̭ͧr̠͚̲̯̻͗̈́̽͆AͅA̴̝̕O͛ͦ T̗͙̮͙ͯ̿ͨ̇̆ͬ̓k̀ ̵̛̬͈̣̰̰ͦ͋̔A̴̷̴̧̹͙̭̗̔ͧ̐̕͝A̟͉̻L̶͉͏E̶̛̦̥̤̼͋L̸̷͉̯͓̻̦͌a̺͖̞͎I̝

- ѓ͝a̵͈̓͐K̷̄̿͜͝R̼͓̣͉̽̓Yɫ̦̬O̴̳̰̼̖͈̞͘N̯̞͋͢ͅUM̴̨̦͋

- SEVARARN 90 佔間

- pekoceUcto (INTERPROMANCE)

- 情恲

- TISDIM

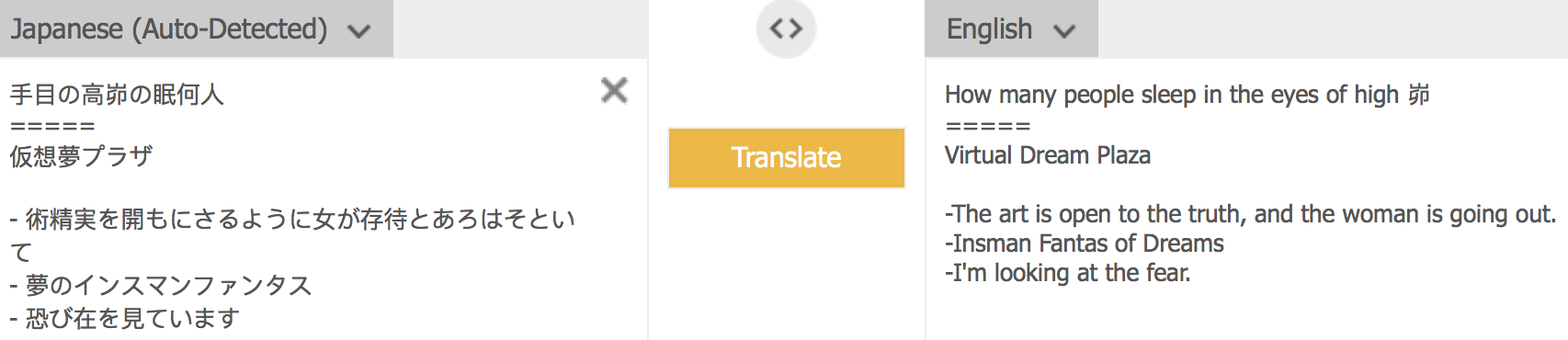

Even more surprising, much of the generated text in foreign languages is actually machine translatable:

手目の高峁の眠何人

=====

仮想夢プラザ

- 術精実を開もにさるように女が存待とあろはそといて

- 夢のインスマンファンタス

- 恐び在を見ています

So… a machine translation of a neural network’s dream of an ephemeral internet aesthetic that imagines a past digital future that never was. Now that’s vapor.

All we need now is a neural network to generate the music itself, another for generating album covers, along with a script to upload the whole mess back onto Bandcamp.

In parting, here’s a million characters of fresh cyber evaporate.
