My Hands Become My Eyes and an Uncanny Adventure in Literal Navel-Gazing

Recently I decided to relocate my eyes. For those unaware, I’ve spent most of my life gazing upon the world through a pair of rather squishy, spherical orbs mounted snugly in my skull, a point of view which, while affording some truly great sights over the years, is starting to feel rather tired and conventional. And so, lacking an appropriately sized melon baller to perform such an ocular transplant manually, I settled for the next best thing: bending my reality using technology. Thus began my surreal trip into the perils and possibilities of modded reality.

Like far too much of my work, this experiment draws inspiration from popular culture: Pan’s Labyrinth in this case. The film features a charming dinner host, and celebrated gourmand, known as the Pale Man. What struck me most about the Pale Man was not his somewhat unsavory appearance nor his avant-garde culinary peculiarities, but that his eyes are set squarely into the palms of his hands.

Inspiration…

Just imagine what it’d be like to see the world through your hands! Think of the applications! Such a thought would have been little more than a stoned musing a few decades ago but, thanks to technology, I realized that I could actually explore just such an existence today.

To do this, I mounted two small cameras to my hands and streamed the video to a virtual reality headset. The implementation was neither difficult nor costly, but getting acceptable results required some trial and error. Actually wearing the headset is a bit hard to describe; perhaps: 60% disorienting, 15% nauseating, and 25% magical. It feels like the future.

I’ll be covering the entire project, from implementation to experience, along with a few follow-up applications of the new camera system. And this story really has it all: trials, hacking, tribulations, comedy, triumph, and sex. Real Hollywood blockbuster type stuff. Let’s take a look.

Setup

Let me begin with a more dry, technical overview of the implementation. Again, the core idea is simple—just streaming two cameras to a virtual reality headset—but the problem does bring some interesting challenges.

I set off with a spring in my step and a gleam in my eye, only to rather quickly stumble over a minor difficulty: I did not actually own a VR headset. This oversight nearly shelved this project before it was even underway, until Google Cardboard came to the rescue.

ProTip: Cardboard + Gaffers tape is basically as good as an Oculus Rift.

Google Cardboard provides a highly mediocre VR experience, but it does have two big points going for it: price and portability. Fifteen dollars is a lot for what amounts to a glorified cardboard box, but extremely reasonable compared to a proper VR setup. And, while the quality of Cardboard VR is not great, the entire headset is self-contained: no wires, PCs, or any of that other expensive nonsense to worry about. This made Cardboard a natural choice for my experiment.

Cameras—Avoiding The Exorcist — A Pair of Big Ol’ Fisheyes — 3D Printing

Now for the rest of the hardware. First up, the cameras.

You know that scene from The Exorcist? That’s basically what I imagined happening as soon as I strapped on my fancy new headset and started viewing the world through my hands. So, from the start, I took steps to minimize the projectile vomit potential.

The cameras had to be small enough to mount in the palms of my hands, while also providing low latency, high frame rate video at an acceptable resolution. Low latency is especially critical when interacting with the physical world, as lagging may cause one to be rather unceremoniously kicked from life. In addition to those criteria, I also wanted a camera with a large field of view to minimize camera shake and somewhat dampen view movement.

The specific camera model I used is a small, USB fisheye webcam from ELP that can capture video at a range of resolutions and framerates:

    $ sudo v4l2-ctl --list-formats-ext
    ioctl: VIDIOC_ENUM_FMT
        Index       : 0
        Type        : Video Capture
        Pixel Format: 'MJPG' (compressed)
        Name        : MJPEG
            Size: Discrete 1920x1080
                Interval: Discrete 0.033s (30.000 fps)
            Size: Discrete 1280x720
                Interval: Discrete 0.017s (60.000 fps)
            Size: Discrete 1024x768
                Interval: Discrete 0.033s (30.000 fps)
            Size: Discrete 640x480
                Interval: Discrete 0.008s (120.101 fps)
            Size: Discrete 800x600
                Interval: Discrete 0.017s (60.000 fps)
            Size: Discrete 1280x1024
                Interval: Discrete 0.033s (30.000 fps)
            Size: Discrete 320x240
                Interval: Discrete 0.008s (120.101 fps)

        Index       : 1
        Type        : Video Capture
        Pixel Format: 'YUYV'
        Name        : YUV 4:2:2 (YUYV)
            Size: Discrete 1920x1080
                Interval: Discrete 0.167s (6.000 fps)
            Size: Discrete 1280x720
                Interval: Discrete 0.111s (9.000 fps)
            Size: Discrete 1024x768
                Interval: Discrete 0.167s (6.000 fps)
            Size: Discrete 640x480
                Interval: Discrete 0.033s (30.000 fps)
            Size: Discrete 800x600
                Interval: Discrete 0.050s (20.000 fps)
            Size: Discrete 1280x1024
                Interval: Discrete 0.167s (6.000 fps)
            Size: Discrete 320x240
                Interval: Discrete 0.033s (30.000 fps)

The two resolutions I was most interested in were: 640x480 at 120fps and 800x600 at 60fps (the iPhone display is limited to 60fps).

Those two resolutions differ in more than just pixel count. Here’s 640x480 for example:

640x480

While 800x600 provides a narrower view by cropping the image sensor:

800x600

In testing, I preferred the wider, 640x480 view as it helped me better place myself in my surroundings and dampen the visual impact of movement jitters without post processing.

Even though such a wide angle view does not match normal human vision, it still feels fairly natural. And, after all, if you’re modding reality, you may as well give yourself a pair of big ol’ fisheyes while you’re at it.

Just as importantly, these cameras can capture jpegs at fairly high frame rates. While not quite Oculus Rift levels, in my testing, I saw a substantial difference between 30fps and 60fps, with anything under 15fps or so being completely unusable.

Finally, these ELP cameras use a standard USB connection and can be connected to just about any computer. I considered options such as the Raspberry Pi camera module, but the flexibility and standardness of these USB webcams ultimately won me over.

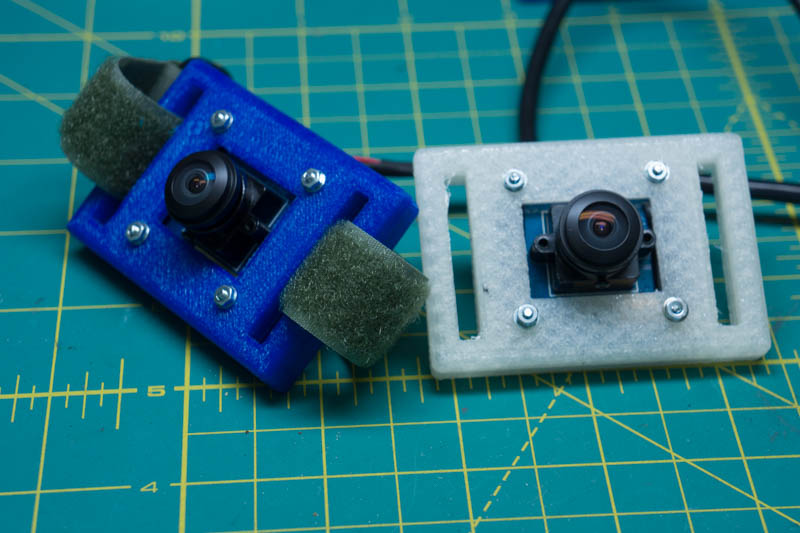

To protect the cameras and help mount them to my body, I designed and 3D printed a pair of extremely simple plastic housings.

There’s not much worth noting with the housings besides the two slots at either end for straps.

A Raspberry Pi — Indirection—Battery Power — More Indirection

With the optics down, next came the brains.

Google Cardboard is a strictly BYOD affair, and I’m using it with an iPhone. But given Apple’s restrictive approach to wired iOS accessories, how can we stream video from these two USB cameras to an iPhone? One answer, naturally, is indirection.

I used a Raspberry Pi 2 Model B, with its open software and its four glorious USB ports, to collect the two video streams and send them to the iPhone. Getting the cameras hooked up to the Raspberry Pi was as simple as plugging them in, but powering both cameras at once without a powered USB hub requires setting max_usb_current=1 in /boot/config.txt.
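As a rough illustration of that config change, here is a small sketch that adds or updates a key=value line in config text without duplicating it. The helper name `ensure_setting` is hypothetical, and the sketch ignores any section filters the file may contain; it works on a string, so writing the result back to /boot/config.txt (as root) is left to you.

```python
def ensure_setting(config_text, key, value):
    """Return config_text with exactly one active `key=value` line.

    Commented-out lines (starting with '#') are left untouched.
    """
    out = []
    found = False
    for line in config_text.splitlines():
        stripped = line.strip()
        is_comment = stripped.startswith("#")
        name = stripped.split("=", 1)[0].strip()
        if not is_comment and name == key:
            # Replace an existing active setting in place.
            out.append(f"{key}={value}")
            found = True
        else:
            out.append(line)
    if not found:
        # Nothing active yet, so append the setting at the end.
        out.append(f"{key}={value}")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    print(ensure_setting("gpu_mem=64\n", "max_usb_current", 1), end="")
```

Running the helper twice gives the same text as running it once, so it is safe to re-apply. A reboot is needed before the Pi picks up the new value.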

The Raspberry Pi is powered by a 3350mAh Anker battery, taped to the side of the Pi. This creates a convenient and fairly small portable computer.

I wouldn’t recommend leaving a device that looks quite like this lying around in an airport or anything.

With both cameras streaming away, the battery lasts a little over two hours.

Then came a dawning realization that my clever indirection had only really passed the buck, as now I needed to get video from the Raspberry Pi to the iPhone.

The Quest for Low Latency — GStreamer — Pipeline Problems

The main concern with the video pipeline was latency. I needed to stream at least a 640x480 video at a minimum of 60 frames per second, all with the lowest latency possible. Then multiply that by two. Many tutorials on Raspberry Pi streaming refer to half-second delays as low latency. That just wasn’t going to cut it.

To further complicate matters, having recently contracted a quite debilitating case of compiler fever, writing a native application to view these streams was strictly out of the question. The stream needed to be something that Safari on iOS could handle.

Motion-jpeg (mjpeg) turned out to be a great fit on all fronts. Most desktop and mobile web browsers can view mjpeg streams natively and, because every frame is a standalone jpeg that does not depend on any other frame, mjpeg video is low latency and fairly cheap to encode/decode.

My first attempt at video streaming used gstreamer. Here’s a gstreamer pipeline that captures raw video from one camera, converts it to jpegs, wraps it in RTP, and sends the data to the viewer over UDP:

    # Pi
    sudo gst-launch-1.0 v4l2src \
        ! video/x-raw,width=640,height=480 \
        ! jpegenc ! rtpjpegpay ! udpsink host=VIEWER_IP port=5200

    # Viewer
    gst-launch-1.0 udpsrc port=5200 \
        ! application/x-rtp, encoding-name=JPEG \
        ! rtpjpegdepay ! jpegdec ! autovideosink

While this works, there are a few key problems. For one, it’s collecting YUV images from the camera instead of jpegs. At 640x480, YUV is limited to 30fps while jpegs can be captured at 120fps.
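To put numbers on that gap, here is a quick back-of-the-envelope sketch. YUYV stores 2 bytes per pixel, so the raw data rate follows directly from resolution and frame rate. The comparison against USB 2.0’s nominal 480 Mbit/s (the Pi 2’s ports are USB 2.0) is my own guess at why the raw modes top out so low, not something the camera documentation confirmed.

```python
USB2_NOMINAL_BPS = 480_000_000  # USB 2.0 high-speed signalling rate, before overhead


def yuyv_bits_per_second(width, height, fps):
    """Raw data rate of an uncompressed YUYV stream (2 bytes per pixel)."""
    bytes_per_frame = width * height * 2
    return bytes_per_frame * 8 * fps


if __name__ == "__main__":
    for fps in (30, 120):
        bps = yuyv_bits_per_second(640, 480, fps)
        verdict = "fits under" if bps < USB2_NOMINAL_BPS else "exceeds"
        print(f"640x480 @ {fps}fps raw: {bps / 1e6:.0f} Mbit/s ({verdict} USB 2.0 nominal)")
```

Raw 640x480 at 30fps comes to about 147 Mbit/s, while the same resolution at 120fps would need about 590 Mbit/s, more than the bus can nominally carry even before overhead. Compressed jpegs sidestep the problem.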

Knowing precisely nothing about gstreamer, a bit of searching revealed that it should be possible to sample jpegs directly, like so:

    sudo gst-launch-1.0 v4l2src \
        ! image/jpeg,width=640,height=480 \
        ! rtpjpegpay ! udpsink host=VIEWER_IP port=5200

Yet, no matter how I tweaked the pipeline, I kept hitting a crash like this. image/jpeg just never worked properly for me.

Then there is the issue of viewing the stream in a web browser. For starters, we can try replacing UDP with TCP:

    # Pi
    sudo gst-launch-1.0 v4l2src \
        ! image/jpeg,width=640,height=480 \
        ! multipartmux ! tcpserversink port=5200

    # Viewer
    # In my understanding, there should be a `multipartdemux` here
    # but that never worked in my testing
    gst-launch-1.0 tcpclientsrc host=PI_IP port=5200 ! jpegdec ! autovideosink

However, this stream still cannot be viewed directly in a browser since the data is actually an HTTP multipart message. There are workarounds, but all those add more complexity and more latency.
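For a feel of what that multipart framing looks like, here is a minimal sketch that pulls individual jpegs out of a buffer of multipart data. It assumes every part carries a Content-Length header and that you already know the boundary string (`frame` in the usage below is made up). It is an illustration of the format, not a client I actually used.

```python
def split_multipart_jpegs(buf, boundary):
    """Extract the payload of each complete part from a multipart byte buffer.

    Assumes each part has a Content-Length header (an assumption of this
    sketch). Stops at the first incomplete or unparseable part.
    """
    frames = []
    delim = b"--" + boundary
    pos = 0
    while True:
        start = buf.find(delim, pos)
        if start < 0:
            break
        header_end = buf.find(b"\r\n\r\n", start)
        if header_end < 0:
            break  # headers not fully received yet
        headers = buf[start + len(delim):header_end].decode("ascii", "replace")
        length = None
        for line in headers.split("\r\n"):
            name, _, value = line.partition(":")
            if name.strip().lower() == "content-length":
                length = int(value.strip())
        if length is None:
            break  # this sketch cannot size the part without a length
        body_start = header_end + 4
        body_end = body_start + length
        if body_end > len(buf):
            break  # body truncated
        frames.append(buf[body_start:body_end])
        pos = body_end
    return frames


if __name__ == "__main__":
    def part(data):
        head = b"--frame\r\nContent-Type: image/jpeg\r\nContent-Length: %d\r\n\r\n"
        return head % len(data) + data + b"\r\n"

    fake = part(b"\xff\xd8one\xff\xd9") + part(b"\xff\xd8two!\xff\xd9")
    print([len(f) for f in split_multipart_jpegs(fake, b"frame")])
```

A browser only does this unwrapping for you when the data arrives as a proper HTTP response, which is the piece the raw TCP stream is missing.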

While gstreamer is incredibly powerful, it was just not the right tool for me. Although with proper tweaking, and by rolling your own client code, gstreamer can probably deliver a higher quality stream than what I ultimately achieved, I just wanted to quickly throw together a prototype that was “good enough”. That’s where mjpeg-streamer came in.

Mjpeg-Streamer Saves the Day — Crossing the Streams

As its name suggests, mjpeg-streamer does one thing and it does it well: stream mjpegs.

Here’s the command to stream 640x480 mjpeg video from a single camera to a webpage:

    sudo ./mjpg_streamer \
        -i "./input_uvc.so -r 640x480" \
        -o "./output_http.so -w ./www"

This stream is available on http://localhost:8080/?action=stream_0 by default.

To stream video from two cameras, simply tack on another -i argument and specify the video devices explicitly:

    sudo ./mjpg_streamer \
        -i "./input_uvc.so -r 640x480 --device=/dev/video0" \
        -i "./input_uvc.so -r 640x480 --device=/dev/video1" \
        -o "./output_http.so -w ./www"

Now camera one is on http://localhost:8080/?action=stream_0, while camera two is on http://localhost:8080/?action=stream_1.
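If you want to generate that command for any number of cameras, something like the following sketch would do it. It only reuses the plugin names, flags, port, and URL pattern shown above. The function names are my own, and the returned list is meant to be handed to something like `subprocess.run`.

```python
def mjpg_streamer_args(devices, resolution="640x480", www="./www"):
    """Build an argument list with one -i input per video device."""
    args = ["./mjpg_streamer"]
    for dev in devices:
        args += ["-i", f"./input_uvc.so -r {resolution} --device={dev}"]
    args += ["-o", f"./output_http.so -w {www}"]
    return args


def stream_urls(host, count, port=8080):
    """URLs for each input, numbered in the order the inputs were given."""
    return [f"http://{host}:{port}/?action=stream_{i}" for i in range(count)]


if __name__ == "__main__":
    devices = ["/dev/video0", "/dev/video1"]
    print(mjpg_streamer_args(devices))
    print(stream_urls("localhost", len(devices)))
```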

With two 640x480 streams running, mjpeg-streamer maxes out at around 50% CPU usage:

mjpeg-streamer really met all my needs and was super easy to set up. What’s not to like?

Wireless Letdown — Of Bands and Widths — The iPhone Connection — A Lightning LAN Party — 100ms Glass to Glass—Coding Capitulation in the form of UIWebView

Up until this point, I had been working with the Pi plugged into ethernet, and everything was working great. The video streaming from the Raspberry Pi to Safari on a computer or iPhone was responsive and smooth, with minimal latency. But when I unplugged the Pi and tried running it over wifi, things quickly came to a stuttering halt.

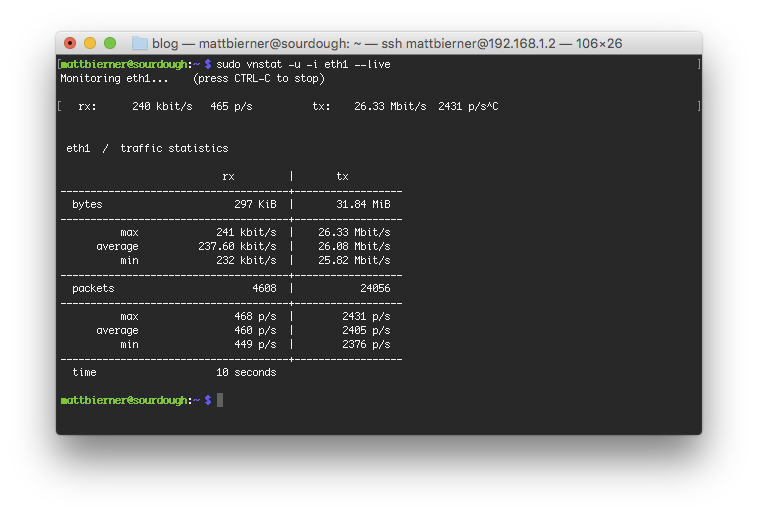

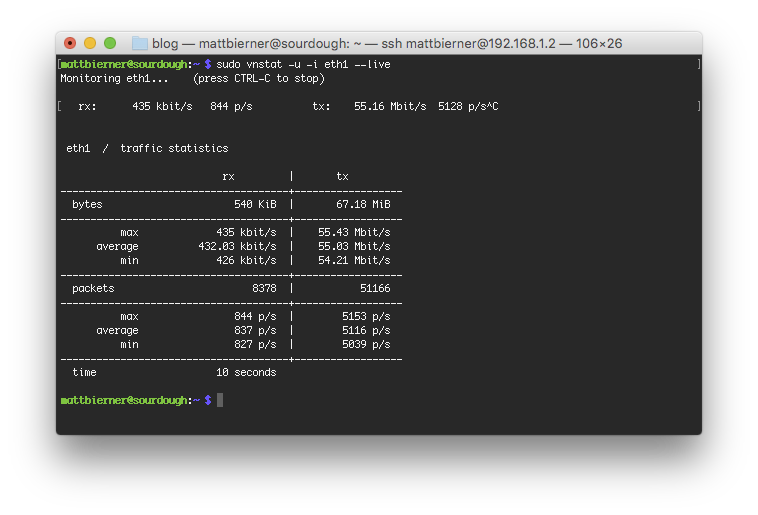

Considering this difficulty, it may be worth taking a quick detour to look at how much bandwidth mjpeg-streamer normally requires.

Two 800x600 streams at 60fps average 26Mbps:

Two lower resolution 640x480 streams at 120fps actually require about twice the bandwidth, despite transmitting only around 1.3 times as much pixel data per second:

55Mbps should be perfectly attainable on a wireless network these days, yet I was unable to get a reliable stream directly from the Raspberry Pi. It’s entirely possible that I was using the world’s worst wifi dongle™—and I certainly wasn’t working in uncongested airspace—but I couldn’t even get a single, lower resolution stream to work without noticeable stutters and latency. I needed a wired connection.
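The arithmetic behind those figures is easy to check. This sketch takes the two measured totals (26Mbps and 55Mbps, treating Mbps as 10^6 bits per second, which is my assumption) and works out the pixel-rate ratio and the average size of a single jpeg frame in each mode.

```python
def pixels_per_second(width, height, fps, streams=2):
    """Total pixels delivered per second across all streams."""
    return width * height * fps * streams


def avg_frame_bytes(total_mbps, fps, streams=2):
    """Average bytes per jpeg frame, given the combined bitrate."""
    return total_mbps * 1_000_000 / 8 / (fps * streams)


if __name__ == "__main__":
    ratio = pixels_per_second(640, 480, 120) / pixels_per_second(800, 600, 60)
    print(f"pixel rate ratio: {ratio:.2f}")
    print(f"800x600 @ 60fps:  {avg_frame_bytes(26, 60) / 1000:.1f} kB per frame")
    print(f"640x480 @ 120fps: {avg_frame_bytes(55, 120) / 1000:.1f} kB per frame")
```

Both modes land at roughly 27 to 29 kB per frame, so with these cameras the bandwidth seems to track the number of frames far more than the number of pixels. Doubling the frame rate roughly doubles the bitrate, whatever the resolution.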

Apple locks down wired iPhone accessories pretty hard but, given that the Raspberry Pi is a normal computer, there is a backdoor we can use to get an iPhone talking directly to the Pi over a lightning cable: the personal hotspot.

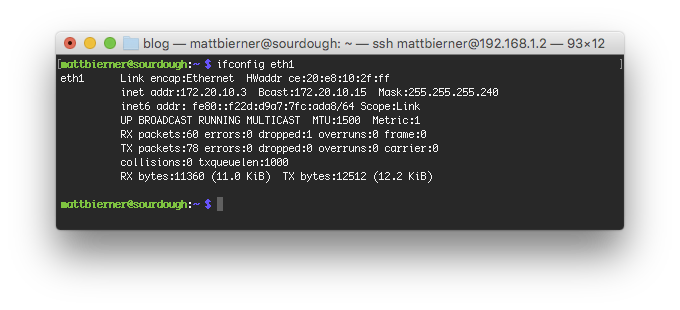

First, I configured the Raspberry Pi to use my iPhone as a personal hotspot. Dave Conroy has some good instructions on setting this up. I found that only the first part of the instructions (up to editing the /etc/network/interfaces) was required. Now when you tether an iPhone to the Raspberry Pi with a lightning cable, the phone should automatically establish a personal hotspot.

More importantly for this application, the iPhone and the Raspberry Pi are now on the same local network.

Simply plug 172.20.10.3:80 into Safari on the iPhone, and you’ll be connected to port 80 on the Raspberry Pi.

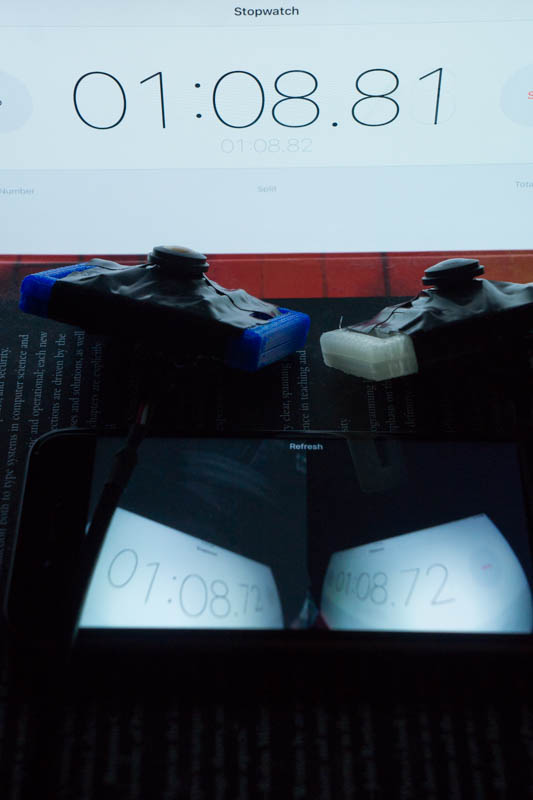

With the iPhone plugged directly into the Pi and both cameras streaming away at 640x480, the glass-to-glass latency was stable around 100ms, with no stuttering or dropouts:

A reliable wireless connection would be preferable, since it eliminates the need to tether the VR headset to the Pi, but if you can’t make that happen, this is a pretty good workaround.
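For a sense of scale on that 100ms glass-to-glass figure, it helps to express it in frames. This is plain arithmetic on the numbers already quoted; the function names are only for illustration.

```python
def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000 / fps


def frames_of_delay(latency_ms, fps):
    """How many frame intervals fit inside a given latency."""
    return latency_ms * fps / 1000


if __name__ == "__main__":
    for fps in (60, 120):
        print(f"{fps}fps: {frame_interval_ms(fps):.1f}ms per frame, "
              f"100ms is {frames_of_delay(100, fps):.0f} frames")
```

At the iPhone’s 60fps display rate, 100ms means what you see is about six refreshes behind what your hand is doing.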

Here’s what a webpage to view the two streams looks like:

    <html>
    <head>
        <title>My Eyes</title>
        <meta name="viewport" content="width=device-width, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0, user-scalable=no">
        <style>
            html, body {
                height: 100%;
                min-height: 100%;
                width: 100%;
                margin: 0;
                overflow: hidden;
            }
            img {
                display: block;
                height: 100%;
                width: 50%;
                float: left;
                /**
                 I always mounted the cameras upside down, so the image
                 must be rotated.
                 */
                transform: rotate(180deg);
            }
        </style>
    </head>
    <body>
        <img src="http://172.20.10.3:8080/?action=stream_0" />
        <img src="http://172.20.10.3:8080/?action=stream_1" />
    </body>
    </html>

You can load this page in Safari just fine, although there are two minor problems: the browser chrome sometimes does not hide itself properly and, for god knows what reason, an iPhone cannot be locked in landscape orientation. So, to finish the setup, I braved compilation once more to throw together an extremely simple UIWebView iOS app that displays the streaming webpage fullscreen in landscape.

Body Mounting — A Rather Singular Debugging Experience — Gloves vs. Straps — Tape, and Lots of It

For the first iteration of this project, I mounted the Raspberry Pi on the front of my chest with a single strap.

While the chest mount works well enough on a man and provides quick access to all the ports on the Pi—and, I must add, plugging an ethernet cable essentially into your body and sshing on in to debug your vision is a rather singular experience—I later developed a backpack mount that is far more practical and far more comfortable for both men and women to wear. You may notice this backpack mount in some of the photos/videos, and I’ll detail it more later.

I tried two ways of mounting the cameras to my hands: straps and gloves.

Of the two, the straps are by far the better approach. The gloves are generally a pain to get on and off, and do not hold the camera firmly in place (unless you wear the straps under the gloves, which kind of defeats the purpose).

Of course, those negatives must be weighed against the fact that, after cutting the fingertips off said gloves and slipping them on, you feel like the most 80s of 80s hackers. That’s why you’ll notice that in most of the photos I’m using the gloves, and not the far more practical straps. Vanity. All is vanity… Don’t be a Lucas. Just use the damn straps.

To protect the cameras, in addition to their cases, I encased them in electrical tape. The cameras do heat up while in operation and the palms of your hands do get sweaty. I also encased the entire Raspberry Pi in multiple layers of electrical tape, except for the ports. Bodily fluids and electronics generally don’t mix.

I secured the wires from the hand-cameras to my arms with athletic tape. Let me tell you, make sure to get at least a three pack of that stuff. Any slack in the wires has a fun habit of snagging on anything and everything around—an altogether jarring experience as none of these wires are quick release. The wires from the cameras were just long enough to reach the Pi mounted on my chest, without limiting my range of motion.

Thoughts

Wiring into this system does have a satisfyingly cyberpunkish feel. There’s none of the plush or polish of an actual consumer product, just wires and electronics taped and strapped to flesh.

I love my gloves. They’re so bad.

All suited up, and with the Synthwave blasting, I even thought I was looking pretty rad, until such grandiosity was rather unceremoniously shattered by an onlooker’s offhand remark. So it goes. As with bouncy castles, and life in general, the observer only sees what is before them and can never truly grasp the thrill of being inside.

The entire setup is pretty hacked together and could doubtless be improved upon, but it works and it works better than I could have anticipated. The software and hardware were flexible enough to let me try out all sorts of fun experiments. You don’t need fancy hardware or the backing of a giant corporation to explore the boundaries of technology. Never know what is possible. Never be scared to fail. Never stop trying.

First Steps in a Strange New Reality

Before discussing the experience of viewing the world through my hands, a quick digression: what do I mean by reality-modding or modded reality? Why be such a pompous motherfucker instead of using perfectly good and familiar terms such as virtual reality or augmented reality? Well, I believe that neither of those accurately captures what this project does.

Virtual reality simulates reality, while augmented reality overlays onto reality. As such, streaming video to your eyes probably technically falls within the continuum of augmented/mixed reality, but the word augment just feels wrong. It’s too damn positive for starters. The far more academic terms mediated reality or modulated reality are perhaps more accurate, yet both are so decidedly unromantic that the short hop to modded reality was all but a given.

I prefer the term modded reality because of its association with various modding scenes (game, car, body—take your pick). At its core, this is a project about tweaking, altering, and hacking one’s subjective experience. The technology component is not important so much as the (for lack of a better phrase) modder spirit behind it. Given such a broad definition, almost every human activity falls within the purview of reality modding, but I still like the term and am sticking to it. And besides, I probably really am one pompous motherfucker.

One Hand — An Entirely New Vantage — Detachment

For my first experience, I mounted a single camera to my right hand and streamed its image to both eyes. This was a good starting point and allowed me to test the basic implementation of the system, while also easing into this new perspective.

Opening my eyes for the first time, I was greeted by a surprisingly normal view. I was sitting in front of my desk, with my right palm held outward to face the camera towards the room around me. None of my body was in the shot so it was just like looking at a low quality video stream of the room.

I then tentatively began to move my hand around. The latency and camera movement actually proved far better than expected. For whatever reason, I had always imagined that video from a hand mounted camera would be extremely shaky, ultimately leading to the aforementioned projectile vomiting. This was not the case. Slowly sweeping my hand around to observe the world, the motion was surprisingly fluid. The camera’s wide field of view helped reduce the impact of any shaking that was present, and the 60Hz refresh rate meant that even rapid movements felt smooth. Even when I unthinkingly reached up quickly with both hands to adjust the headset, the camera motion never felt unconstrained.

Growing bolder, I waved my right hand around more rapidly to examine latency. The 100ms delay is there to be sure, especially if you are actively looking for it, but it never felt like a limiting factor. Playing around with some bric-a-brac on my desk, I never felt out of sync with the world.

Having examined my immediate surroundings, I next turned the camera on myself. I’ve never much liked watching myself on video, so observing myself in realtime was strange, especially when looking upon my body from such an odd vantage. This situation was made even stranger by the fact that my face was mostly obscured by the VR headset and I had a bunch of wires taped to my body.

I’ve observed myself in realtime using mirrors of course, and I’ve also observed myself after the fact in videos or photos, but those views are usually limited to a few specific angles and perspectives. The view from my hand was an entirely new one. It’s close up and intimate, while also unfamiliar and very depersonalizing. Inspecting my head and body, I did not feel I was looking upon my own body so much as that of some cyborgish Brobdingnagian.

This sense of detachment was made more acute by the perspective. My eyes normally root my understanding of my body’s position in physical space. The eyes are also where I tend to place my “self”. In my mental model, I know that below my eyes is my nose, then my mouth and chin, then my neck and shoulders, with my two arms sprouting out at any number of crazy angles, and so on. It all begins with the eyes.

Shifting my point of view broke this whole model. It wasn’t even so much that I was seeing the world through my hands, although that was odd, but that I was seeing the world from somewhere that was not my eyes. The eyes-then-nose-then-mouth-and-so-on logic that had worked so well for all these years suddenly didn’t work anymore, but my brain cared little for such nonsensicality and happily continued shortcutting along with its old mental model.

I had to consciously remind myself that my head was not, in fact, located behind the camera. Instead, I had to think about where my hand was in relation to my body and work backwards from there. The process generally went something like this: starting with my head, determine the relative position of my right hand; examine the view from that hand; and then work backwards to determine the position of my head in real world space.

Over time, I grew a little more comfortable wearing the setup, but it never started feeling natural. That’s hardly to be expected though. After all, I’ve spent something like 200,000 hours (has it really been that long?) viewing the world one way, and only a few short hours looking upon it through my hands.

Two Hands — Right Eye Domination — Of Ghosting and Picasso — Life From a 3rd Person View

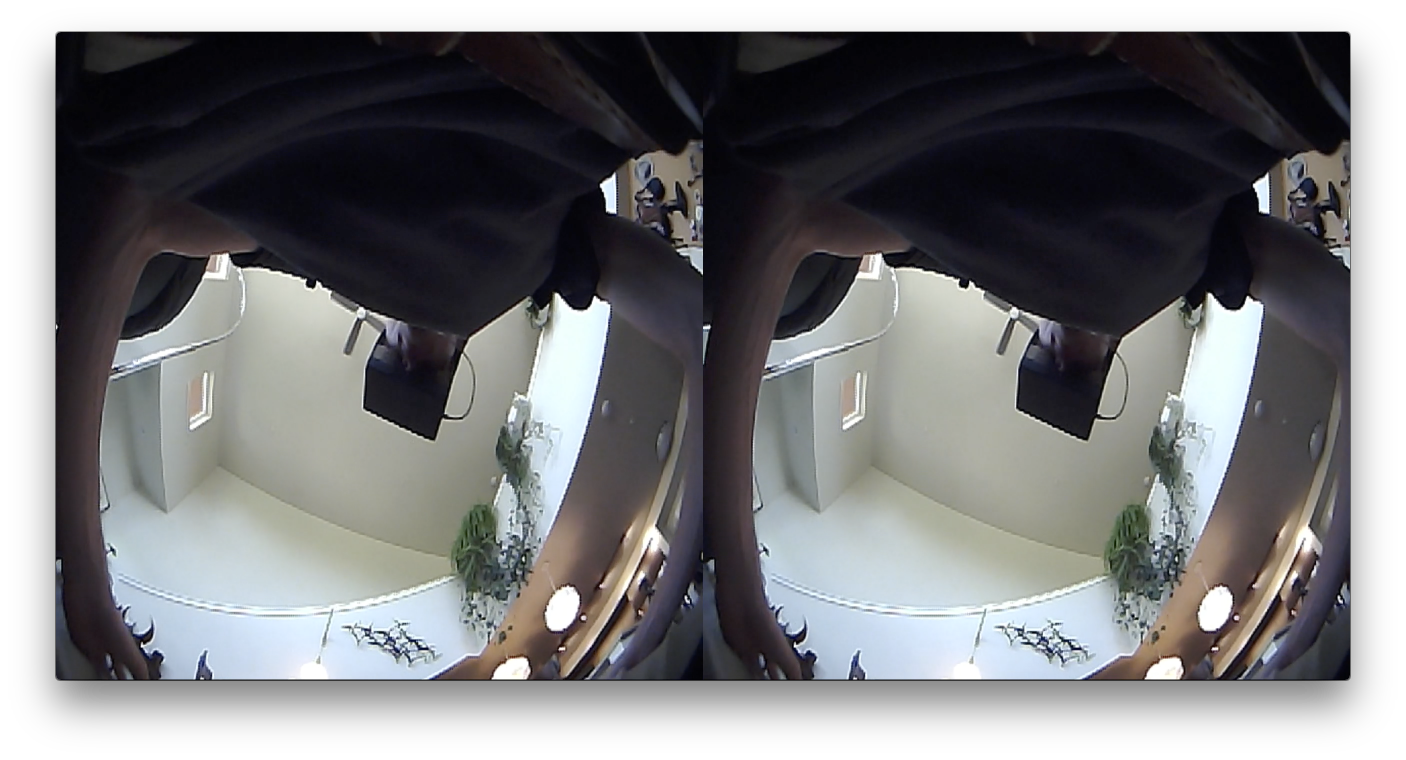

Having taken my first shaky steps viewing the world with one hand, it was time to go full Pale Man and hook a camera up to each hand. As may be expected, the experience was extremely disorienting but also extremely intriguing.

For this setup, I strapped a camera to each hand and streamed the video from each camera to the corresponding eye. The rest of the system remained unchanged.

I immediately realized that my brief time exploring the world with the single hand camera had done little to prepare me for this new existence. The core problem of this system is also its main selling point: that each eye is presented with a different image.

In waking life, close one eye and the other continues to work just fine, giving the illusion that your eyes operate independently. An unnatural situation like this though, where each eye is presented with different images, reveals that this is not the case. What we perceive is actually a construct of the brain itself, unconsciously stitched together from raw sensory input and transformed into what we know as reality. This process is normally so transparent as to go completely unnoticed, but tweak the input (push the values outside the expected ranges) and the man behind the curtain is revealed. And with these hand cameras, the brain is presented with a very unnatural set of inputs: two images that cannot be unified.

The ethernet cable was only used to capture the video. It is not normally required.

I’m right eye dominant, so, most of the time, my brain decided to only use input from my right eye and ignore the left. Such subconscious cyclopsism is most common when the eyes are presented with scenes strongly differing in color or brightness, such as peering around a corner into a bright hallway with one hand, while keeping the other hand gazing into a poorly lit room.

Although my right eye dominated most of the time, seemingly at random, I would switch to the left eye. With further experimentation, I began to gain some control over this behavior.

To switch eyes, I first identified which eye was currently dominant. This is easiest if you have some sort of reference point in the scene and know which hand is currently looking at it. The best reference I found was my other hand. In the video, you’ll notice me moving my dominant hand in front of the other and waving it about. Motion helps bring the first glimpses from the other eye into view, after which you can switch eyes fully through further concentration. It’s an awkward and unreliable process. Unless I remained focused on my left eye input, my right eye would always dominate the view.

Just as often, my brain would try to unify the images from both eyes, producing an odd ghosting effect. This made it difficult to tell where different parts of the unified image came from, and therefore where objects were in physical space. Was the object near my left hand, my right hand, or possibly neither?

Sometimes when viewing the same object from two different perspectives (or two similar objects) in what can be best described as Picasso vision, I saw the objects merged together into a crazy new form. This is unfortunately impossible to capture in photos or video.

For these reasons, walking about and interacting with the world was far more difficult than with the single hand-camera. The most common problem was understanding which eye (if any) was dominating my view. This sounds like it should be obvious, but I constantly found myself reaching out for ghosted objects that just didn’t exist where I thought or trying to use the wrong hand to interact with something. Whereas normal body movement is so natural as to go unnoticed, now every action had to be deliberate and thought out. I had to essentially relearn how to use my body.

I quickly developed a few coping techniques. One was moving my hands next to each other so that both cameras aim in the same general direction, creating a poor man’s binocular vision. This tends to produce a lot of Picasso because the eyes are seeing very similar, yet slightly different, scenes. The advantage though is that you no longer have to worry about which eye is dominating, since both hands are in the same general location. It’s a good reset point when confused.

Another technique is using one hand for interaction and the other as your single eye. I normally kept the non-eye hand aimed directly at my body, producing a dark scene which the brain will naturally filter out, leaving only the image from the viewing hand. Then, when I needed to flip a switch, open a door, or just grab something from my desk, I knew exactly where my interaction hand was and how to move it to reach out into the world.

It’s fairly difficult to keep your palms pointed outwards all the time. (Try sweeping out a full horizontal circle with both hands, palms outward, to see what I mean. You generally have to cross your arms over each other to accomplish a full 360.) So, as I grew more comfortable, I began experimenting with more freeform hand views. Rotating the view added another wrinkle to perception and was far more than I could handle at this point.

My personal favorite view came from raising both hands over my head and aiming my palms downward.

This creates a weird third person view that works very well with the fisheye lenses. It’s just fun walking around in the third person and viewing your body as if it were some avatar. Mounting the cameras above and behind the head using a pole or similar rig (perhaps even a small drone) would be even better, since keeping your hands raised above your head limits interaction and gets tiring pretty quickly.

Four Hands (sort of) — A Test of Picture in Picture Configurations — Finding a Stable Two Hand View

I next investigated different ways to present the two hand-camera video streams, while hopefully avoiding the ghosting and disorientation that plagued the first setup.

The most direct approach simply duplicates the image from both hands to both eyes. This took care of many of the original problems, but introduced new difficulties of its own.

There is a strong tendency to lock onto one of the views, while ignoring the other. It’s more like switching between two channels instead of actually using both views at once. While using one hand, I even found myself forgetting about the other hand entirely, until performing a mental channel switch. This made the overall experience similar to the one hand view I originally experimented with.

I really wanted to see if I could use both hands at the same time effectively, so next I tried reverting to the original two hand view, while adding a small picture-in-picture window at the bottom of the screen to show what the other eye was seeing.

As might have been predicted, this initial picture-in-picture setup made ghosting even worse. Now not only does each eye see a different full screen image, but it also sees a different picture-in-picture window. Instead of anchoring the view as I had hoped, this additional window just provides another distraction and source of conflicting information.

Much better results were had by using my dominant hand as the fullscreen view for both eyes, with my other hand for the picture-in-picture.

This eliminates the ghosting problems and is probably the most usable configuration of the lot. The view reminds me of driving a car: most of the time is spent focusing on the primary view from the dominant hand, but, when you need to put the other hand to use, simply glance in the mirror, so to speak, to see what it is up to. Compared to the side-by-side view, the transition is far less jarring and did not lead to the same sort of channel lock-in.

Of all the views I explored, none allowed for true independent hand eyesight. Even the best of the two handed views, the duplicated picture-in-picture view, is the most usable only because it allows quickly glancing between views, not using both views at the same time.
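To keep the four configurations straight, here is one way to write them down as data: for each eye, which hand fills the view and which hand (if any) sits in the picture-in-picture inset. The mode names, the stream-to-hand mapping, and the helper functions are all made up for this sketch and say nothing about how the views were actually rendered.

```python
# Hypothetical mapping of streams to hands; swap if your cameras are wired the other way.
LEFT_HAND, RIGHT_HAND = "stream_0", "stream_1"


def eye_views(mode, dominant=RIGHT_HAND):
    """Return, per eye, the full-view streams and the optional inset stream."""
    other = LEFT_HAND if dominant == RIGHT_HAND else RIGHT_HAND
    if mode == "one_hand_per_eye":
        left = {"full": [LEFT_HAND], "inset": None}
        right = {"full": [RIGHT_HAND], "inset": None}
    elif mode == "side_by_side":
        left = right = {"full": [LEFT_HAND, RIGHT_HAND], "inset": None}
    elif mode == "per_eye_with_inset":
        left = {"full": [LEFT_HAND], "inset": RIGHT_HAND}
        right = {"full": [RIGHT_HAND], "inset": LEFT_HAND}
    elif mode == "dominant_with_inset":
        left = right = {"full": [dominant], "inset": other}
    else:
        raise ValueError(f"unknown mode: {mode}")
    return {"left_eye": left, "right_eye": right}


def eyes_agree(views):
    """True when both eyes are shown exactly the same picture."""
    return views["left_eye"] == views["right_eye"]


if __name__ == "__main__":
    for mode in ("one_hand_per_eye", "side_by_side",
                 "per_eye_with_inset", "dominant_with_inset"):
        print(mode, "-> eyes agree:", eyes_agree(eye_views(mode)))
```

Laid out this way, the pattern in my results is easier to see: the two configurations where both eyes receive the same picture are the ones that avoided ghosting.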

For that reason, the original two handed camera system was really the most intriguing. Don’t get me wrong, it was completely unusable and made me feel somewhat sick, but the blended visuals and cubist perspectives were a novel take on reality. It’s the one I’m most interested in exploring further.

Taking Off the Headset — Reality is Broken — Stratton’s Experiments

Perhaps the strangest experience came not while wearing the headset, but after taking it off. Anyone who’s spent any length of time in virtual reality knows how jarring it can be to return to the real world. Although this headset is just an altered view of reality, instead of an outright replacement, the experience of returning is just as jarring, if not greatly more so.

I first noticed this phenomenon after a twenty minute immersion with the single hand-camera. After walking all over my apartment, bumping into walls, knocking over stuff, and generally causing a ruckus, I was starting to feel a little sick. The nausea really hit me after I took off the headset though. Reality felt different in some indescribable way, as if I had stepped into an old PBS or BBC TV drama with their unplaceable aura of fakery. Looking through my normal eyes, the perspective, the movement, and the image-quality all just felt off.

Slipping back on the headset for a moment, it’s not like that felt like reality either. The view from my hands remained just as bizarre as ever. Nothing felt real anymore.

This sensation grows stronger the longer you spend immersed, and can last a fairly significant period of time. I believe the look and feel of the video itself had a greater impact on me than the odd perspective. A two hour session using the two hand-camera setup put me out of it for the rest of the day.

Stratton’s experiments with inverted vision showed that, given a few days, the human brain can adapt to some altered views of reality. This makes me wonder if binocular vision is hardwired in the human brain or if it can be unlearned. Having little formal knowledge of neuroscience, and having obtained precisely no degrees in the field, it is my expert opinion that, in such a situation, the most logical result would be that the brain would adapt by ignoring input from one eye, instead of suddenly gaining the ability to view each eye independently. This wasn’t something I was able to test though, and I have probably only spent around six hours so far looking upon the world through my hands.

Further Experimentation

After a few nauseous trips gazing at the world through my hands, it was time to explore reality from some other bodily vantages.

Body-Cam — A Pi Backpack Mount — First Person Arm Simulator

Given the recent rise in body-cam footage, for my next experiment I strapped a single camera to my chest, at about the midpoint of my sternum.

The latest in men’s fashion

For the Raspberry Pi, although the simple chest mount I used for the hand-camera system works well enough on a man, it is uncomfortable for a woman to wear. It also was in the way for mounting the body-cam. Simply moving the mount below the breast doesn’t work either, as that part of the chest expands and contracts with breathing, and there is little to stop the strap from sliding up and down. I also tried rotating the Pi to the side of my chest, under the arm, but this has the same fundamental problems, while also placing the Pi in a rather dangerous sweat floodplain.

The best setup turned out to be wearing the Pi like a backpack, with a strap over each shoulder and under each arm.

Such a Pi backpack is even kind of cute, in a Banjo Kazooie type way, and really frees you up nicely to interact with the world.

For the body-cam, I simply duplicated the same view for both eyes. Aligning two cameras properly for stereoscopic vision is difficult without a mounting plate, and misalignment is far worse than being limited to a two dimensional view.

After the experience of using my hands for eyes, the body-cam view was actually surprisingly normal at first. It’s basically as if you’ve shrunk a foot or two, while also losing the ability to turn your head.

Your body and head are not normally in the frame. When you do reach out your hands, they appear suspended at roughly eye level, a view not unlike your typical first person arm simulator (FPaS). Interacting with the world using these detached arms was incredibly entertaining.

The biggest challenge is depth perception. The fisheye lens combined with the odd new perspective made judging the distance from my body to objects very difficult. Countless times, I’d reach out for a chair, expecting it to be a few feet away, only to jam my fingers against it. Or, trying to grab something off the countertop, I’d slowly reach out, extending my arm farther and farther until it was completely straight, only to realize my target was still a good two feet away. Properly aligning your arms is also difficult since you are looking out at them, instead of down on them.

Just as with the hand cameras, at first you’ll naturally want to turn your head to adjust the view. It takes time to learn that you must physically turn your entire body to alter the direction you are looking. You also have to relearn where your body is in physical space all over again.

The body cam was just a lot of fun. It’s completely ridiculous, but not so entirely foreign as to be unusable. It also provided a segue into my next experiment…

Inspiration by Way of Naked Lunch — Reconsideration — A Slightly Less Worse Idea

The next inspiration came from Naked Lunch, a not altogether advisable source of personal-guidance, but certainly an entertaining one. The book contains a famous vignette about The Man Who Taught His Asshole to Talk that I recommend checking out. The main lines of interest were:

That’s one thing the asshole couldn’t do was see. It needed the eyes.

—Naked Lunch

Casting aside potential figurative interpretation, this seemed like a fine direction to explore.

However, upon reflection, I realized that literally viewing the world as an ass was quite possibly the worst idea ever. And furthermore, such an assward view is not exactly cutting edge—with a small, but sizable, segment of the population having thoroughly explored the asshole perspective on life for years now.

So instead, I decided to advance this little experiment forward a few stages of psychosexual development to the next logical zone: the genitals. As had already been well established, this was not the worst idea ever, although it is perhaps a close second. Plus, if I didn’t do it, someone was bound to eventually (if they haven’t already, I honestly never know what to google to determine such things).

In Which I Gaze upon the World from my Navel

Yet such an experiment implies more than just looking upon the world—a perspective which is little more than a lower version of the body-cam mount discussed earlier. No, such an experiment also implies an exploration of sexual activity. (Skipping right on through to the psychosexual endgame, although some may debate that.)

So, at the risk of taking this blog into rather salacious waters (and with a near certainty of providing far more insight than is advisable, or altogether desirable, into the extracurriculars of yours truly), allow me to recount the experience a partner (who we’ll abbreviate as “C.” for purposes of this discussion) and I shared with modded reality sex. The whole thing was so bizarre that I feel that it is worth recounting. I will try to document things, while hopefully avoiding the braggadocio, grin-winkery, and general Seth Rogenity that usually accompanies such a topic.

Setup — Mounting The Camera, Climbing Harness Style — More Tape

The main challenge was coming up with a system that would keep the camera securely mounted in place, while also not proving terribly uncomfortable or getting in the way too much.

Mounting a camera directly to the genitals seemed ill advised, so I instead opted to use a modified version of the body-cam setup to mount the camera somewhere below the navel.

Ready for my Time Magazine cover shoot

One longer strap goes around the waist, while two leg straps provide additional stability. The overall effect is similar to a climbing harness. Properly tightened down, the camera mount is not that uncomfortable, and works well for both females and males. You can easily adjust the vertical positioning of the camera depending on your tastes, although we ultimately both settled on positioning it slightly below the belly button.

The leg straps proved to be the most troublesome part of the whole setup. No matter the adjustment, unless you lay perfectly straight, these straps were either tourniqueting your legs all to hell or completely falling off. To combat this, I added another pair of small straps to connect the back of the leg loops to the back of the belt, plus a carabiner to hold these straps together near the belt. This alleviated the original problem, but also caused the camera to tend to angle itself upwards. It must be manually adjusted every so often.

I forgot the carabiner for this photo. It goes between the two leg straps, near the belt. I’m wearing it in the video later.

In the end, everything sort of worked, but I’m pretty sure there are better ways to accomplish this. Using softer, more elastic materials certainly wouldn’t have hurt for one, and actually, a cheap climbing harness probably wouldn’t work all that poorly either.

For the Raspberry Pi, we used the backpack setup detailed for the body-cam. It is important to remember never to lie directly on your back while wearing it though, at least without a pillow or similar soft support around it.

It’s also perfectly possible to forgo mounting the Pi to your body at all and just rest it on the bed or fasten it to a nearby post. This is much easier using a USB hub to extend the camera-to-Pi distance (because, at this point, why not throw even more hardware into the mix), and reliable wifi streaming, or a 2 to 3 meter Lightning cable, for the headset.

The entire setup is cumbersome and rather fragile. There’s nothing less romantic than suddenly going blind because some wire came unplugged or, worse, having the camera come loose and start flailing about in a most nauseating manner. Such are the challenges of ad-hoc reality modding. Keep a laptop and ethernet cable at the ready for debugging (usually just restarting the streaming server or iPhone app).

The wires themselves are another hazard. We went through a simply staggering amount of tape just trying to hold the damn thing together and keep the wires from forming loops that would get caught on things.

Further Practical Considerations — General Thoughts on VR Headsets

A few more considerations to discuss.

The camera works best in fairly bright light and, even with indirect daylight filling the room, shadows get in the way (a problem made far worse when using overhead lighting). Adding a few lamps next to the bed in addition to the ambient daylight worked well enough, but bright spots in the scene can cause the camera to over-adjust the exposure. It’s also not a perfect solution, although sometimes it is better not to see a perfectly clear picture.

The physical experience of having sex while wearing a VR headset was also odd to say the least. Let me cover the physical aspects of wearing the headset itself now, and discuss aspects specific to this project in a moment.

Whereas a blindfold increases awareness of your body and attunes the other senses, wearing a headset produces a strong feeling of detachment and unreality. Everything feels out of sync. I imagine a true, immersive VR environment would make the problem even worse (although I cannot speak directly to this).

And, I must say, the headset itself does manage to get in the way quite a bit. Kissing requires Spider-Man-type contortions and I never got a good sense of where my head (or the rest of me for that matter) was located in physical space. Slow, super deliberate movement was all I ever felt comfortable with. Even then, I was constantly head-butting things because I forgot that I had what amounted to a cardboard brick strapped to my face.

But all of that is when you are wearing the headset. When your partner has the headset on, things are just as odd.

There’s something extremely off-putting about not being able to look your partner in the eyes. I never realized how important this is until it was taken away. Again, it’s not at all like a blindfold. A blindfold merely covers the eyes while still suggesting the face underneath. A VR headset, on the other hand, masks the entire top of the face (from high forehead to lower nose) with a brick, leaving the mouth exposed. This is not attractive. Frankly, it makes the other person seem less vibrant and alive, even less human somehow.

Wiring Up — A Real “Now What” Moment — Freudian Doom — Image Quality — Admitting Defeat

Now, the concept of mounting a camera to one’s crotch and viewing the world from this vantage was certainly not lacking in the self-aware, tongue-in-cheekiness department. Both C. and I well knew this going in. And it goes almost without saying, but a lighthearted, more exploratory mood is essential if you’re hoping to get anything positive out of the experience.

To start with, the setup is not something that can be thrown on spur of the moment. Just properly putting on the equipment takes around ten minutes, even with assistance and prior experience, not to mention all the planning work and organization required beforehand (lighting, batteries, setting up the Raspberry Pi and camera, etcetera).

I started with the headset+camera, before switching with C. We finished by exploring a few other configurations.

Somewhat confident that the camera mount was just about as comfortable and secure as it was ever going to be, I nervously glanced around one last time, and slipped on the headset. Then came an awkward “now what” moment. Having dreamt up this idea and put it into execution, now that things were actually happening, I realized that I may not have thought things through as thoroughly as might have been advisable.

Any mood that had existed when we started was now thoroughly dead, choked by straps and smothered in athletic tape, leaving something that felt almost clinical. This was heightened by an almost immediate and profound feeling of dissociation and derealization.

I originally mounted the camera too low, right above the pubic area, and it didn’t take long to realize that this camera mounting position is not the most practical. The initial view that greeted me was rather bizarre: think 1993 Doom, but with a penis as the gun. I’m not trying to be funny or anything, that’s literally the best analogy I can come up with (but I must note that, once experienced, such a view really makes one see certain genres of entertainment in a whole new light). The perspective is all distorted though, so, most of the time, it just looks like there is some fleshy appendage swinging all about at the bottom of the frame. All attempts to explain what I was seeing sent C. sniggering.

The rest of my body was not normally in the shot, except when the camera angled too far upwards. Sticking out my hands was a similar experience to the body-cam, but it was even more difficult to judge depth and orientation since my hands were even farther from the camera and I now was looking up at my arms.

Having your arms be above your eyes is weird…

My first look at C. proved to be just as odd. I’m not sure if it was the fisheye camera or image quality or what, but the view was not at all what I expected.

We both started standing on our knees. In this position, the camera centers at the level of your partner’s navel. Her body was all distorted by the fisheye lens and by the unfamiliar angle though, with the greatest distortion near the edges of the frame. This is none too flattering, although I think a larger part of the problem is the image quality itself. It’s reminiscent of a 90s home video, but with an uncanny, hyperreal quality—you can’t help but feel a tad voyeuristic watching it.

Trying to gain a better view of the expression on C.’s face (it being, without doubt, one of complete horror), I also became aware of a rather fundamental limitation: I could not look up. Or down for that matter.

When something on the edge of vision caught my eye, I naturally tried to center it. But what would have been a quick turn of the head before now required pivoting my entire body to line my hips up with the target. And that’s only the horizontal aspect. Looking up and down just doesn’t work. This was bad enough using the body-cam, but at least there you could lean forwards or backwards to control the view.

Try standing up now and leaning forward while observing how your hips pivot, and specifically how the area below the navel moves. There’s some movement, but until you’re bending far enough forward to almost touch your toes, the navel area remains pretty much perpendicular to the ground. This meant that looking up or down required me to lean my entire torso forward or backward. Even with support, this was difficult and resulted in some yogaesque contortions.

Given these difficulties, I conceded that there was pretty much no way I was going to be able to move freely about without terribly injuring someone (and I was not altogether looking forward to explaining the circumstances leading up to that emergency room visit). So instead, I remained in more or less fixed positions for the rest of my time wearing the headset.

For her part, C. seemed thoroughly tickled by some of these difficulties, although she was far more understanding after later experiencing what it is like to wear the headset. She now started experimenting with the camera and seeing my reaction, and this was just what was needed, as we slowly progressed into exploring what worked and (more often) what didn’t with this system.

Intercourse; A Scientific View of the Situation — Observer, not Participant — David Hasselhoff

As is sometimes the case, more direct sexual activity proved less interesting than everything surrounding it. Honestly, the most enjoyable part of the experience was just exploring this strange new take on reality in an intimate, yet playful way with another person. But allow me to share a few general observations, without getting caught up in distracting details.

The primary motivation of wearing the headset+camera is to explore the unique experience it offers, yet only a subset of sexual activities and configurations even integrate this view. For many, the camera is pointed at the bed or covered up or just generally getting in the way without adding any real value. In such cases, there’s a real temptation to just slip off the headset entirely.

Having found a proper view, there is just as often a temptation to close your eyes. While the fisheye camera does well at dampening transverse type motion, it actually ends up amplifying the type of motion most favored by Alex and his droogs. Taken with the close-up view, warped perspective, image quality, and of course the subject matter, the overall effect is not all that appealing.

Now even if one believes that a glimpse of the genitals is the most intimate and private of glimpses, this system presents a completely impersonal, perhaps even medical, take on the situation. The effect is disturbing on some psychological level, a reduction of sex to a mechanical, organic process. That may be accurate, but it’s not truthful.

The images presented by the headset always dominated my focus, making it very difficult to achieve the sort of “suspension of self”, for lack of a more accurate term, that generally accompanies such situations. I actually felt too present, the exact opposite of what one would expect, but present only as an observer rather than truly experiencing. Even my other senses felt muted, sounds muffled, sensation blunted.

Just as I was growing fairly convinced things could not be any more bizarre, Spotify decided that now was the perfect time for some True Survivor (although, I must admit that the entire playlist consisted exclusively of similar songs). Needless to say, even as background music, the brain cannot handle such sensory overload.

I was pretty ready to take the headset off.

Switching Roles — Getting All Existential — Of the Roles Themselves — Distance at Close Range — Borderline Unpleasantness

After resting a moment, we now switched roles, with C. taking the headset and camera, and I taking over as guide. That such a switch is notable at all is actually one of the flaws of this system. The ability to swap who is wearing the headset, and especially the camera, at any time would have been preferable and, I believe, would have made the entire experience more spontaneous, or at least less asymmetrical.

Whereas, at this point, I already had a few hours’ worth of experience using the headset with cameras on my hands or chest, C. was starting fresh. She therefore provided an interesting alternative take, although in retrospect, I think it is probably wiser to start elsewhere before working up to this stage.

With C. wired into the system, she now slid the headset on for the first time. As had been done for me, I waited while she explored the new view and relearned how her body worked from this new perspective. There’s nothing really to be taught, so you really just need to give someone time to discover things themselves.

To the observer, there’s a somewhat comic ineptitude to these first steps. You watch them grasp at objects that just aren’t there, become fascinated for a minute or two by some mysterious sight on their arm, and turn their head all about trying to adjust the view. Every motion is tentative, carrying with it a real sense of their distrust in what they are seeing. It’s even stranger than watching someone interact with virtual reality or augmented reality, because, in this case, the person is clearly interacting with normal reality but in this almost intoxicated manner.

Things turned from comic to somewhat uncomfortable when I sensed that C. was looking at me. Now, I had worn the headset and could imagine what she was seeing, but that didn’t prevent a sudden sense of scopophobia from sweeping over me. Without a pair of human eyes to look into, the sensation is, appropriately enough, more like being looked over by a camera.

After a few moments, C. said she was doing pretty well but she also agreed that a more stationary approach was required. Looking at her lying on the bed, the oddity of the whole situation really hit me. There’s an almost H. R. Giger quality to the wires and straps against flesh, and to the headset covering the eyes. We again took things slowly and tried out a small range of different experiences, some of which we almost instantly moved on from, and some of which were even somewhat enjoyable.

And now, one of the central problems with this system also became clear: the system essentially reduces the person wearing the headset to the role of receiver, with the ungoggled partner as leader. While there’s nothing strictly enforcing this, in our initial testing, the person with the headset on is generally so klutzy that that’s how it worked.

Even though the roles had now been reversed, there was still a real feeling of distance. In some of her observations before, and now while wearing the headset, C. spoke to this as well, but I was surprised that the feeling carried over even now that I was no longer wearing the goggles. We were so close together physically, and yet experiencing things so differently.

Something about the headset filled me with a dread of not knowing what C. was experiencing. That was completely ridiculous of course—no one ever knows what anyone else is actually experiencing—but not being able to really see her face, or even what she was seeing, really threw me off. I compensated by keeping up a rather continuous stream of narration and confirmation, which was initially welcome, but a few minor critiques later made it clear that such play-by-playism was really not necessary and, if anything, even somewhat mood-killing.

On the other hand, C. seemed to be growing more quiet. This was probably around twenty minutes after we started, and approximately twenty minutes longer than she estimated she would last wearing the headset. She explained that while she didn’t feel nauseous, at least not like she was really going to be sick, she felt off in some way, and that the headset was making her feel increasingly claustrophobic. The experience was getting to the borderline of being unpleasant.

Resting with the headset off, C. found it difficult to put her experience into words. Having a reference point helped me read into and understand some of the little terms or expressions she used. Reading between the lines, in some respects, she described a similar disorientation and unreality, but with a more prominent sense of feeling unwell. Her overall comparison was more like being in a dream-like fog. Sure there were moments that were enjoyable enough, but, for her, the entire experience was just odd, and the sex itself wasn’t terribly good either.

We also discussed how the system could be improved (beyond the obvious: make it more comfortable and reliable). One idea we kept coming back to is that both of us really should be wearing headsets, instead of all the swapping nonsense. Mounting a camera to a bedpost to create a stationary viewpoint may also be interesting.

Body Swapping — With Great Power Comes Great Responsibility — The Horrors of True “Self Love”

Although we did not have two headsets, based on one of C.’s ideas, we agreed to explore one more configuration: one person wearing the headset and the other person wearing the camera. Wifi streaming really would have come in handy here, but we managed using the USB hub extension method mentioned before. While this was probably the most intriguing part of the afternoon, it was also perhaps the least enjoyable.

I now took the headset from C., while she continued to wear the camera, before we switched. By now, energy levels were kind of low. We were tired, somewhat nauseous, and suffering from the odd reality withdrawal described earlier. Plus we already kind of knew what to expect.

For the person only wearing the camera, the effect is little different than when your partner was wearing both the camera and the headset. The headset still gets in the way and makes them look like some cyborg, but now you also have the uncomfortable camera mount to deal with. And now, not only are you responsible for leading your partner, you are also literally their eyes.

For the person wearing the headset, compound all the problems with perspective and body-sense with the fact that you are now looking upon your body from an external view that you no longer directly control, and the result is not pretty. When C. moved that first time while wearing the camera, I had a real out of control sensation that I did not experience with any of the prior camera mounts. Any sudden movements by the camera wearer were usually accompanied by much jokey hollering to “stop moving”, the usual effect of which was to send both parties into giggling fits which did not at all help the situation. And the out of body sensation offered by all prior views pales compared to this setup.

This shot is obviously not from the session. It was taken from a demonstration with me sitting crosslegged on the ground with the camera mounted to my body. Hopefully it gives an idea of how odd it can be to look upon your body from this general sort of perspective.

Now, unless you are the most narciss of narcissists, there is nothing less erotic than watching yourself have sex using this system. This is not Horace’s mirrored bedchamber or some home video, where physical or spatial distance separates you from the act. As already discussed, this system presents what can be best described as a medical view of the situation and, given that even your partner does not look good in such a light, it is safe to say that you look infinitely worse.

To be more blunt: it’s basically you fucking yourself. And, although the psychologist may argue otherwise, I can’t help but doubt that such a literal form of “self-love” is actually all that appealing on any level, subconscious or not.

This mixed configuration is something that truly has to be experienced to be understood, but we did not linger. This was the only time in the whole experiment that I began to feel like I really was going to exorcist all over the place, and C., still recovering from her previous experience, was definitely not a fan.

Besides, by now the Raspberry Pi battery LED was angrily blinking red and the iPhone was hot enough that there was a real possibility that the Cardboard would spontaneously combust at any moment (we just had to choose the afternoon of the hottest day of the year, didn’t we). And there’s only so much of such things that one can handle in a day.

All things considered, the system worked better than expected though. We only had to reboot the streaming server once or twice, and reload the webpage on the iPhone a few times more.

Thoughts on the Experience — Failings — Successes

As a tool to enhance physical intimacy or cliched eroticism, this system fails horribly. Tastes vary of course, but, even after taking into consideration any improvements to comfort or usability that could be made, the end result is just odd. On those levels, it made us feel farther apart rather than closer together.

But if we instead consider the far broader universe of human sexuality, and its interweaving and blending with all other aspects of existence, then this system does a simply marvelous job. Yes, it is awkward and uncomfortable to wear; yes, the headset is nauseating and disorienting; and yes, the view it presents can be unappealing, and yet there is something about it.

There are elements of BDSM to be sure—the trust and vulnerability; the roles and power dynamics; even down to the equipment (in the most broad terms) such as the straps and so on—but without all the corny ritualization and subcultureisms. No, perhaps one beauty of this system is that, when using it, you can’t take yourself too seriously and, in that, it strikes on a more fundamental truth: everyone just needs to lighten the fuck up. There’s a strange sort of intimacy to be found in embracing the magical absurdity of it all—whatever it is—but one that is far more profound. Perhaps I’m romanticizing, but C. and I experienced that something together—a shared and lasting experience that wasn’t necessarily all positive but certainly wasn’t all negative either. For my part, it’s strange, but I’ve never felt more human than that afternoon; it only took wiring up with cameras and computers, and engaging in carnal activities like any little ol’ ridiculous animal. Duality and all that.

And while I don’t doubt that modded reality sex—and perhaps, in the short-term, even systems very similar to this one—will be popular in the future, I hope such systems are never relegated to being simple sex toys; that’s far too boring. I wouldn’t have detailed any of this experience if I felt there wasn’t some deeper value to be had here.

Conclusion

While I’m not sure how novel any of this project is, I found it to be incredibly interesting. Exploring different takes on reality using the system was both disorienting and profound, and exploring this with another person was bizarre and magical. This system suggests a future where reality itself can be tweaked, remixed, hacked, and shared. My only hope is that such a future is one of modification, not augmentation.

At its best, technology empowers us to experience more, explore more, be more human. But, so often, the focus is instead on being better: smarter, more efficient, stronger, prettier, healthier, and bla bla bla. I say: leave such regressive fantasies to the scared old men who git religion because they are unable to come to terms with their mortality and ultimate inconsequentiality. Be more, not better.

Thanks C. You rock.